The Curse of the Diaeresis

Table of Contents

Introduction

What happens when a user wants to search your website for a word such as this…

cooperate

…but some instances are stored as this:

coöperate

Or, if that is a bit too esoteric, consider any searchable data set where some words may contain accents:

Zoë

señor

église

Lech Wałęsa (the leader of Solidarność)

and even this one:

Motörhead

Acknowledgement: The title of this post was stolen from a New Yorker article, published back in 2012:

These accents (or more generally, diacritics) can interfere with your search results.

There are also other traps for the unwary:

-

Ligatures - such as the

æin dæmon, or the Germanßin straße (street). -

The humble ampersand (&). What if it’s not used consistently -

&in some places, butandin others? -

Letters which may get replaced by easier-to-type alternatives - for example, the Danish philosopher Søren Kierkegaard. Do we see Søren or Soren in our data set? That diagonal line through the

ois not a diacritic - in Danish and Norwegian, these are two different letters. -

Symbols. Your keyboard may well have a key for the U.S. dollar sign (

$), or pound sterling (£), or Euro (€) - but does it have¢for cents, or¥for the Japanese yen? What does your data set use? Just acor aY?

How can we handle these cases, to help searchers find what they are looking for?

A Basic Scenario

In this scenario, let’s take the word église (French for “church”) as our example. We want a user to be able to enter any one of these words as their search term:

eglise Eglise église Église

And we want their search to find text which matches any of the the above words. That is, we want our results to contain all occurrences of the word, regardless of whether it starts with e, é, E or É.

Upper/lower case differences are trivial to handle - so we will ignore those, here. But remember to test for these cases.

Normalization and Decomposition

Before we look at a coding solution to our problem, we have to discuss Unicode normalization and decomposition.

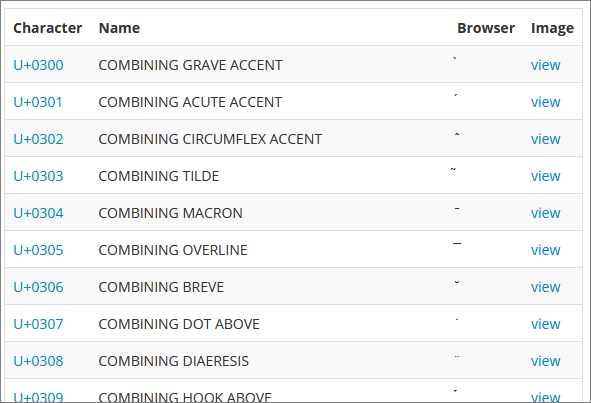

The Unicode standard defines a range of values which represent diacritic marks:

https://www.fileformat.info/info/unicode/category/Mn/list.htm

The abbreviation for this class is Mn - which is important because it can be used in regular expressions (see below).

The marks in this class can be combined with unaccented characters to create an accented character. So, for example, the letter é is represented in Unicode as:

Unicode Character é (U+00E9)

But it can also be decomposed in Unicode into this:

Decomposition: e (U+0065) - ◌́ (U+0301)

That is, the letter e combined with the acute accent mark.

The process of decomposing a Unicode character results in a Unicode normalized form of that character.

There are four normalization algorithms defined in Unicode. In summary, they are:

NFD - canonical decomposition. Basically, what we see in the “decomposition” example above. A string containing é will become a string containing e followed by the acute accent. Unicode specifies the set of rules to be followed for canonical decomposition.

NFC - canonical decomposition followed by canonical composition. A string will go through NFD transformation, followed by the reverse. Doing this guarantees you won’t be left with any decomposed characters (which may have existed in the original string).

NFKD - compatibility decomposition. This includes canonical decomposition rules, plus additional decomposition mapping rules - for example, to transform the single (ligature) character fi into two characters f and i.

NFKC - compatibility decomposition, followed by canonical composition. So, in this case, the fi character will remain split into the two characters f and i. It will not be recombined.

Handling Our French Church in Java

We can make our search work the way we want by reducing all our text to unaccented letters. So if the search term is église, we will first transform it to eglise in a two-step process:

- Decompose the search term into a normalized string.

- Remove accents from the string.

We have to do step (1) in order to do step (2).

Step 1: Java handles text normalization using the java.text.Normalizer class. The normalize method takes an input string and a normalizer (one of the four described above).

Step 2: In Java, we use the regular expression Unicode class p{Mn} to find and remove unwanted diacritic marks.

|

|

Now we can take our transformed string and use it as our search term.

This is crying out for a set of unit tests, to verify it does what we want. We might want to trim the string, as well. It depends on how our search actually works.

Half Way There…

We’re still only half way to our solution.

The data we are searching also needs to be brought into line. Any words which contain diacritics need to go through the same decomposition and removal processes as the search term. Here, however, we don’t want to alter the text in the searchable data - we just want to add to it.

For example, if our searchable data contains the phrase…

Ancienne église priorale Saint-Divitien

…then our searchable data also needs to include the unaccented word “eglise”. But we need to leave the original phrase intact, for obvious reasons.

Exactly how we implement this depends on our specific scenario. Are we searching for word tokens, or for full phrases…? Depending on the answer, one approach is to scan all our searchable text, and store all the affected (changed) words in a separate field, not displayed to users:

Visible and searchable: Ancienne église priorale Saint-Divitien

Hidden, but also searched: eglise

Simply put: You need to supplement the searchable text to help your users find what they need.

Refinements

The above code uses NFD as its normalizer, and Mn as the class of Unicode marks to remove. We may prefer to use NFKD (e.g. for ligatures) - and M instead of Mn. The regex p{M} covers three different classes of Unicode marks:

Mn - non-spacing marks - as already described above

Mc - combining spacing marks

Me - enclosing marks (you’ll almost certainly never need these!)

And remember those traps I mentioned at the start of this post? Some of those may need custom handlers, if they are an issue for you.

Client-Side Search

We may be using client-side searching - e.g. in a tool such as DataTables. There are JavaScript equivalents for the Java processing described above. An equivalent JavaScript code for my Java example above is this, using the normalize function:

|

|

In my case I was not able to use the p{Mn} regular expression in my JavaScript. Perhaps it’s not supported by the regex engine I was using. Or perhaps I was doing it wrong. Either way, I was able to use the Unicode range.

Lucene/Solr, ElasticSearch and ASCII Folding

A final note.

What I’ve been describing above is somewhat home-made. One line of supporting code, assuming you are implementing search yourself, in your application.

If you’re using a reasonably robust full-text search framework, then it almost certainly already has the ability to do what I’ve been describing - and a whole lot more.

For example, take a look at Lucene’s AsciiFoldingFilter class.

That covers a lot more ground than my one-liner above.

But their basic approach is the same as mine (or, mine is the same as theirs!). When Lucene builds a full-text index, you specify how the index will handle its data. For example, should it be indexing text after performing ASCII folding? And if the answer is “yes” then Lucene needs to use the same ASCII folding transformation on the user’s search terms.

I’ll take a closer look at this in another post.