Converting File Formats Using Python 3

I recently needed to convert text files created by one application into a completely different format, to be processed by a different application.

In some cases, the files were too large to be read into memory all at once (without causing resource problems). Here “too large” meant tens or hundreds of gigabytes.

The source files also did not always have line terminators (some did; most did not). Instead they had separately defined record layouts, which specified the number of fields in a record (with data types), together with the field terminator character.

I therefore needed to (a) find a way to read a source file as a stream of characters, and (b) define how the program would identify complete records, by counting field terminators.

The heart of the solution, written in Python 3, was based on the following:

|

|
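A minimal sketch of this pattern follows. The names `iter_chars` and `chunk_size` are illustrative rather than taken from the original script, and `io.StringIO` stands in for a large file opened in text mode.

```python
import io

def iter_chars(stream, chunk_size=4096):
    """Yield the characters of a text stream one at a time, reading it in chunks."""
    # iter(callable, sentinel) calls stream.read(chunk_size) repeatedly and
    # stops as soon as a call returns the sentinel, here the empty string.
    for chunk in iter(lambda: stream.read(chunk_size), ""):
        for ch in chunk:
            yield ch

# io.StringIO stands in for a real file; the tiny chunk size is only for the demo.
sample = io.StringIO("abc\x01def\x01")
chars = list(iter_chars(sample, chunk_size=4))
```

Only one chunk is held in memory at a time, so the size of the source file does not matter.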

So, what is iter(callable, sentinel)?

The documentation introduces the functionality:

iter(object[, sentinel])

If the second argument, sentinel, is given, then object must be a callable object. The iterator created in this case will call object with no arguments for each call to its __next__() method; if the value returned is equal to sentinel, StopIteration will be raised, otherwise the value will be returned.

What is a “callable object” in Python? Anything which can be called - such as a function, a method and so on.

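Why is a callable object needed? Because we want the Python built-in function iter() to iterate over something which is not naturally iterable in a useful way: in this case, a file with no line terminators. (Iterating over a text file directly yields one line at a time, so a file without line terminators would come back as a single enormous line.) Another example would be a file containing binary data.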

Therefore we have to wrap our source data in something which can be treated as if it were iterable.
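To illustrate the problem, here is a small sketch using made-up sample data with SOH ('\x01') field terminators and no newlines. Iterating over a text stream directly splits on line terminators only, so the whole content arrives as a single item:

```python
import io

data = "id1\x01Alice\x01id2\x01Bob\x01"  # hypothetical data, no '\n' anywhere

# Iterating a text stream yields one line per step.
lines = list(io.StringIO(data))

# With no '\n' in the data, the entire content comes back as one "line".
print(len(lines))  # 1
```

For a file of tens of gigabytes, that single "line" would be the whole file, which is exactly what we are trying to avoid.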

Using a lambda expression for our callable is a convenient and succinct piece of syntax. It lets us read the file one chunk at a time (each chunk containing up to 4,096 characters; the final chunk may be shorter).

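Regarding the sentinel, the technique used in the above sample code sets the sentinel to an empty string. Reading from a text file returns an empty string only once the end of the file has been reached, so the sentinel is never encountered while there is still data to read: every chunk is passed on for processing, and the iteration stops cleanly at the end of the file.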
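The behaviour of the sentinel can be checked on a small in-memory stream. This sketch uses `io.StringIO` and a chunk size of 4 purely for illustration:

```python
import io

stream = io.StringIO("abcdefghij")

# Each call to read(4) returns up to 4 characters; the last chunk may be shorter.
chunks = list(iter(lambda: stream.read(4), ""))
print(chunks)  # ['abcd', 'efgh', 'ij']

# Once the stream is exhausted, read() returns the empty string,
# which is the value the sentinel is compared against.
print(repr(stream.read(4)))  # ''
```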

Each resulting chunk is a string of text, and a string is naturally iterable in Python. We can therefore use for ch in chunk to iterate over that string, one character at a time.

So, instead of reading the entire file into memory all at once, we only need to read in much smaller chunks, one after another.

Acknowledgement: The solution is based on information taken from the following Stack Overflow question and its answers:

What are the uses of iter(callable, sentinel)?

Below is the full solution, which shows how the script counts field terminators.

Note that while the input file uses field terminators (a control character at the end of every field), the output file is written with field separators (a control character in between each field) together with a line terminator (the usual Linux ‘\n’ control character).

|

|
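As a rough sketch of the terminator-counting idea (not the original script), the function below builds fields until it has counted enough terminators for one record, then writes that record as a separator-delimited line. The function name `convert`, the `fields_per_record` parameter, and the choice of '\x1f' (unit separator) as the output separator are all assumptions made for illustration; data types from the record layout are ignored here.

```python
import io

def convert(src, dst, fields_per_record, terminator="\x01",
            separator="\x1f", chunk_size=4096):
    """Rewrite terminator-delimited fields as separator-delimited lines.

    Returns the number of complete records written.
    """
    field = []    # characters of the field currently being read
    record = []   # completed fields of the record currently being built
    written = 0
    for chunk in iter(lambda: src.read(chunk_size), ""):
        for ch in chunk:
            if ch != terminator:
                field.append(ch)
                continue
            # A terminator closes the current field.
            record.append("".join(field))
            field.clear()
            if len(record) == fields_per_record:
                # Enough terminators counted: the record is complete.
                dst.write(separator.join(record) + "\n")
                record.clear()
                written += 1
    if field or record:
        # Assumed policy: treat leftover data as an error rather than drop it.
        raise ValueError("input ended in the middle of a record")
    return written

# Hypothetical two-field layout, with in-memory streams standing in for files.
src = io.StringIO("1\x01Alice\x012\x01Bob\x01")
dst = io.StringIO()
count = convert(src, dst, fields_per_record=2)
```

With real files, `src` and `dst` would be file objects opened in text mode, for example with `open(path, newline="")` so that Python does not translate any line-ending characters in the data.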

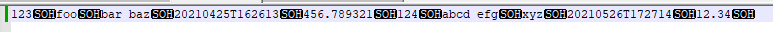

An example (very small) test file for the above input file spec:

Here we see that there are no line terminators, only an SOH control character at the end of each field. Therefore, more typical approaches such as using file.readline() will not work here.

There are certainly alternative approaches - this is not the only way to get the job done.

For example, you could use file.read(chunk_size), something like this:

|

|
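One way this alternative might look is an explicit loop that stops when `read()` returns an empty string. The name `iter_chunks` is hypothetical, and `io.StringIO` again stands in for a real file:

```python
import io

def iter_chunks(stream, chunk_size=4096):
    """Yield successive chunks from a text stream using an explicit loop."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            # An empty string means the end of the stream has been reached.
            break
        yield chunk

chunks = list(iter_chunks(io.StringIO("abcdefghij"), chunk_size=4))
print(chunks)  # ['abcd', 'efgh', 'ij']
```

On Python 3.8 and later, the loop can also be written as `while chunk := stream.read(chunk_size):`, which behaves the same way.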